Scala Interview Questions

1) What is Scala?

Scala is a general-purpose programming language that supports object-oriented, functional and imperative programming styles. It is strongly and statically typed. In Scala, every value is an object, whether it is a function or a number. It was designed by Martin Odersky and first released in 2004.

2) What are the features of Scala?

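Notable features of Scala include type inference, singleton objects, immutability, lazy computation, case classes and pattern matching, string interpolation, higher-order functions, traits, and a rich collections library.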

3) What are the Data Types in Scala?

Data types in Scala are very similar to Java's in terms of storage and length. The difference is that Scala has no concept of primitive data types: every type is an object, and type names start with a capital letter (Int, Double, Boolean and so on). A table of data types is given in the tutorials.

4) What is pattern matching?

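Pattern matching is a feature of Scala that checks a value against a series of patterns. It resembles the switch statement of other languages but is more powerful: patterns can test types, destructure case classes and carry guard conditions. Cases are tried in order, and the first one that matches is selected.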
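A minimal sketch of pattern matching follows. The Shape, Circle and Rectangle types and the describe function are hypothetical names chosen for illustration only.

```scala
object PatternMatchingDemo {
  // A small sealed hierarchy to match on (hypothetical example types).
  sealed trait Shape
  case class Circle(radius: Int) extends Shape
  case class Rectangle(width: Int, height: Int) extends Shape

  // Cases are tried top to bottom; the first one that matches is used.
  def describe(value: Any): String = value match {
    case 0               => "zero"                      // literal pattern
    case n: Int if n < 0 => "negative int"              // type pattern with a guard
    case n: Int          => "positive int"              // type pattern
    case Circle(r)       => s"circle with radius $r"    // destructuring a case class
    case Rectangle(w, h) => s"rectangle $w x $h"
    case _               => "something else"            // default case
  }
}
```

For example, PatternMatchingDemo.describe(-5) selects the second case, because the first case does not match and the guard n < 0 holds.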

5) What is for-comprehension in Scala?

In Scala, the for loop is known as a for-comprehension. It can be used to iterate over, filter and return a collection. A for-comprehension looks a bit like a for loop in imperative languages, except that, when used with yield, it constructs a collection of the results of all iterations.
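As an illustration, here is a small sketch of a for-comprehension that iterates, filters and yields a new collection. The value names are made up for the example.

```scala
object ForComprehensionDemo {
  // Iterate, filter with a guard, and collect the results with `yield`.
  val evenSquares: List[Int] =
    for (n <- List(1, 2, 3, 4, 5, 6) if n % 2 == 0) yield n * n

  // Two generators produce every combination, like a nested loop.
  val pairs: List[(Int, Char)] =
    for {
      n <- List(1, 2)
      c <- List('a', 'b')
    } yield (n, c)
}
```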

6) What is breakable method in Scala?

Scala has no break statement, but you can get the same effect by importing scala.util.control.Breaks._ and calling the break method inside a breakable block. Calling break exits the enclosing breakable block, which lets you leave a loop early.
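A short sketch of leaving a loop early with breakable and break. The takeUntilOver helper is a hypothetical name used only for this example.

```scala
object BreakableDemo {
  import scala.util.control.Breaks.{break, breakable}

  // Collect numbers until the first one greater than `limit` is seen.
  def takeUntilOver(numbers: List[Int], limit: Int): List[Int] = {
    val collected = scala.collection.mutable.ListBuffer.empty[Int]
    breakable {
      for (n <- numbers) {
        if (n > limit) break() // jumps out of the enclosing breakable block
        collected += n
      }
    }
    collected.toList
  }
}
```

In idiomatic Scala the same result is usually written without break, for instance with numbers.takeWhile(_ <= limit).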

7) How do you declare a function in Scala?

In Scala, functions are first-class values. You can store a function in a variable, pass it as an argument and return it from another function. You define a function with the def keyword. You must specify the type of each parameter, while the return type is optional. If you omit the return type, the compiler infers it from the function body; it is Unit only when the body evaluates to Unit.

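8) Why do we use the = (equals) sign in a Scala function?

You can define a function with or without the = (equals) sign. With it, the function returns the value of its body. Without it (the so-called procedure syntax), the function returns Unit and works like a subroutine. Procedure syntax is deprecated in Scala 2.13 and is not supported in Scala 3, so prefer writing = together with an explicit Unit return type.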

9) What is a function parameter with a default value in Scala?

Scala lets you assign default values to function parameters. If you don't pass a value for such a parameter when calling the function, its default value is used.

10) What is a named parameter in Scala?

When calling a function in Scala, you can specify the names of its parameters. Named arguments can be passed in any order. You can also pass values without names, in which case they are matched by position.
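Default values and named arguments can be sketched together. The greet function below is a hypothetical example, not part of any library.

```scala
object ParameterDemo {
  // `greeting` and `punctuation` have default values; `name` is required.
  def greet(name: String, greeting: String = "Hello", punctuation: String = "!"): String =
    s"$greeting, $name$punctuation"

  val usingDefaults: String = greet("Ann")                          // both defaults used
  val positional: String    = greet("Ann", "Hi")                    // values matched by position
  val named: String         = greet(punctuation = "?", name = "Ann") // any order when named
}
```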

11) What is higher order function in Scala?

A higher-order function is a function that either takes a function as an argument or returns a function. In other words, a function that works with other functions is called a higher-order function.

12) What is function composition in Scala?

In Scala, functions can be composed from other functions. Composition produces a new function that applies two functions one after the other: f compose g applies g first and then f, while f andThen g applies f first and then g.
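The sketch below shows a higher-order function and the two standard composition methods on Function1, compose and andThen. The function names are illustrative.

```scala
object CompositionDemo {
  val addOne: Int => Int = _ + 1
  val double: Int => Int = _ * 2

  // A higher-order function: it takes a function and returns a new one.
  def twice(f: Int => Int): Int => Int = x => f(f(x))

  // andThen applies the left function first; compose applies the right one first.
  val addThenDouble: Int => Int = addOne andThen double // double(addOne(x))
  val doubleThenAdd: Int => Int = addOne compose double // addOne(double(x))
}
```

With an input of 3, addThenDouble gives (3 + 1) * 2 = 8, while doubleThenAdd gives (3 * 2) + 1 = 7.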

13) What is Anonymous (lambda) Function in Scala?

An anonymous function is a function that has no name but works as a function. It is a good choice when you don't need to reuse the function later.

You can create an anonymous function by using either => (rocket) or the _ (underscore) wildcard in Scala.
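Both forms can be sketched side by side; the list and value names are made up for the example.

```scala
object LambdaDemo {
  val numbers: List[Int] = List(1, 2, 3)

  // Explicit parameter with the => (rocket) syntax.
  val doubled: List[Int] = numbers.map(x => x * 2)

  // The underscore stands for the single parameter.
  val tripled: List[Int] = numbers.map(_ * 3)
}
```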

14) What is multiline expression in Scala?

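Expressions that are written across multiple lines are called multiline expressions. Be careful when using them in Scala: if a line could already end a statement, the compiler may treat the next line as a new statement. In Scala 2, for example, when an expression is split before an operator such as +, the second line is parsed separately, so break the line after the operator or wrap the expression in parentheses.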

15) What is function currying in Scala?

In Scala, a method may have multiple parameter lists. When the method is called with fewer parameter lists than it declares, the call yields a function that takes the missing parameter lists as its arguments.

In other words, currying is a technique of transforming a function that takes multiple arguments into a chain of functions that each take a single argument.
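A small sketch of currying, using hypothetical add and multiply functions. The curried method used on multiply is the standard one defined on Function2.

```scala
object CurryingDemo {
  // A method with two parameter lists.
  def add(a: Int)(b: Int): Int = a + b

  // Supplying only the first list yields a function awaiting the second.
  val addFive: Int => Int = add(5)

  // Converting an ordinary two-argument function to curried form.
  val multiply: (Int, Int) => Int = _ * _
  val multiplyCurried: Int => Int => Int = multiply.curried
}
```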

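16) What is a nested function in Scala?

In Scala, you can define a function inside another function. The inner (nested) function is visible only within the enclosing function and can use its parameters and local values.

Scala also lets you define functions with variable-length parameters (varargs), which allow you to pass any number of arguments when calling the function.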
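The following sketch combines a variable-length parameter with a helper function defined inside another function. The names sumOfSquares and square are hypothetical.

```scala
object NestedAndVarargsDemo {
  // `values: Int*` accepts zero or more Int arguments.
  def sumOfSquares(values: Int*): Int = {
    // Nested helper, visible only inside sumOfSquares.
    def square(n: Int): Int = n * n
    values.map(square).sum
  }
}
```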

17) What is object in Scala?

An object is a real-world entity that has state and behavior. A laptop, a car and a cell phone are real-world objects. An object typically has two characteristics:

1) State: the data values of an object are known as its state.

2) Behavior: the functionality that an object performs is known as its behavior.

An object in Scala is an instance of a class. It is also known as a runtime entity.

18) What is class in Scala?

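A class is a template or blueprint from which objects are created. It can also be seen as a description of a group of objects of similar type.

In Scala, a class can contain fields (data members), methods, constructors and nested classes.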

19) What is anonymous object in Scala?

In Scala, you can create an anonymous object, that is, an object that has no reference name. It is a good choice when you don't need to reuse the object later.

20) What is constructor in Scala?

In Scala, a constructor is not a special method. Scala provides one primary constructor and any number of auxiliary constructors.

The primary constructor is defined together with the class: its parameters follow the class name, and all statements in the class body are executed as part of it. If you don't declare any parameters, the compiler creates a primary constructor with no parameters, also known as the default constructor.
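A minimal sketch of a primary and an auxiliary constructor. The Student class and the default age of 18 are assumptions made purely for illustration.

```scala
object ConstructorDemo {
  // The parameter list after the class name is the primary constructor.
  class Student(val name: String, val age: Int) {
    // Auxiliary constructor: it must start by calling another constructor.
    def this(name: String) = this(name, 18)
  }

  val full: Student    = new Student("Ann", 21) // primary constructor
  val partial: Student = new Student("Bob")     // auxiliary constructor
}
```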

21) What is method overloading in Scala?

Scala supports method overloading, which allows us to define several methods with the same name but different parameter lists. It improves readability because related operations can share one name. You can overload a method by changing either the number of parameters or their types.

22) What is this in Scala?

In Scala, this is a keyword that refers to the current object. You can use it to access instance variables and methods and to call other constructors.

23) What is Inheritance?

Inheritance is an object-oriented concept used to reuse code. You achieve it with the extends keyword: one class extends another. The class that is extended is called the super, parent or base class. The class that extends it is called the derived, child or sub class.

24) What is method overriding in Scala?

When a subclass defines a method with the same name and signature as a method in its parent class, it is known as method overriding. A subclass overrides a method when it wants to provide its own implementation of behavior defined in the parent class.

In Scala, you must use the override keyword to override a concrete method of the parent class. The keyword is optional when implementing an abstract method. Unlike Java, Scala uses a keyword here rather than an @Override annotation.
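As a sketch, the hypothetical Vehicle and Bike classes below show inheritance with extends, an overridden method, and a final method that a subclass is not allowed to override.

```scala
object OverrideDemo {
  class Vehicle {
    def describe: String = "generic vehicle"
    final def wheelsLabel: String = "wheels" // final: subclasses cannot override it
  }

  class Bike extends Vehicle {
    // `override` is mandatory because Vehicle.describe is concrete.
    override def describe: String = "bike"
  }

  // The overriding method is chosen at runtime, even through the parent type.
  def describeAs(v: Vehicle): String = v.describe
}
```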

25) What is final in Scala?

The final keyword in Scala prevents members of a super class from being overridden in a derived class. You can declare variables, methods and classes as final.

26) What is final class in Scala?

In Scala, you can create final class by using final keyword. Final class can't be inherited. If you make a class final, it can't be extended further.

27) What is abstract class in Scala?

A class declared with the abstract keyword is known as an abstract class. It can have both abstract and non-abstract methods, and it cannot be instantiated directly. Abstract classes are used to achieve abstraction.

28) What is Scala Trait?

A trait is like an interface with a partial implementation. In Scala, trait is a collection of abstract and non-abstract methods. You can create trait that can have all abstract methods or some abstract and some non-abstract methods.

29) What are trait mixins in Scala?

In Scala, trait mixins mean that a class or abstract class can extend any number of traits. You can extend traits only, or combine traits with a class or an abstract class.

The order of mixins matters: the class or abstract class must come first, after extends, and the traits follow, each introduced by with. Otherwise the compiler reports an error.
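A short sketch of mixing two traits into a class that also extends an abstract class. Animal, Walker, Swimmer and Duck are hypothetical names.

```scala
object MixinDemo {
  abstract class Animal { def name: String }
  trait Walker  { def walk: String = "walks" }
  trait Swimmer { def swim: String = "swims" }

  // The abstract class comes first after `extends`; traits follow with `with`.
  class Duck extends Animal with Walker with Swimmer {
    val name: String = "duck"
  }
}
```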

30) What is access modifier in Scala?

An access modifier defines how accessible our data and code are to the outside world. You can apply access modifiers to classes, traits, data members, methods, constructors and so on. Scala provides levels ranging from the least accessible to accessible by all, and you can choose whichever fits your requirement.

In Scala, there are only three access levels: public (the default, written with no modifier), private and protected.

31) What is array in Scala?

In Scala, an array is a mutable, fixed-size collection of values of the same type. It is an index-based data structure: indexes run from 0 to n-1, where n is the length of the array.

Scala arrays can be generic. It means, you can have an Array[T], where T is a type parameter or abstract type. Scala arrays are compatible with Scala sequences - you can pass an Array[T] where a Seq[T] is required. Scala arrays also support all the sequence operations.

32) What is ofDim method in Scala?

Scala provides the Array.ofDim method to create multidimensional arrays. A multidimensional array stores data in matrix form. You can create arrays of two, three, four or more dimensions according to your need.
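For illustration, a two-dimensional array created with Array.ofDim might look like this. The dimensions and the stored value are arbitrary.

```scala
object OfDimDemo {
  // A 2 x 3 matrix of Ints; every cell starts at the default value 0.
  val matrix: Array[Array[Int]] = Array.ofDim[Int](2, 3)

  // Cells are addressed by row index, then column index.
  matrix(1)(2) = 42
}
```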

33) What is String in Scala?

In Scala, a string is a sequence of characters. It is an index-based data structure that stores its characters linearly in memory. Strings are immutable in Scala, as in Java.

34) What is string interpolation in Scala?

Starting in Scala 2.10.0, Scala offers a new mechanism to create strings from your data. It is called string interpolation. String interpolation allows users to embed variable references directly in processed string literals. Scala provides three string interpolation methods: s, f and raw.

35) What does the s method do in Scala string interpolation?

The s method of string interpolation lets us embed variables directly in a string literal. You don't need the + operator to build your output string. Each variable or expression is evaluated and replaced by its value.

36) What does the f method do in Scala string interpolation?

The f method is used to format your string output. It is like the printf function of the C language, which produces formatted output. Each embedded variable can be followed by a format specifier that matches its type.

37) What does the raw method do in Scala string interpolation?

The raw method of string interpolation produces a raw string. It does not interpret escape sequences such as \n that are present in the string.
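The three interpolators can be compared in one small sketch. The variable names and values are made up for the example.

```scala
object InterpolationDemo {
  val name: String = "Scala"
  val count: Int = 7

  // s: substitutes variables and ${...} expressions.
  val viaS: String = s"Hello, $name! 1 + 1 = ${1 + 1}"

  // f: printf-style format specifiers after each variable.
  val viaF: String = f"$name%s has $count%03d items"

  // raw: the backslash and the letter n stay as two literal characters.
  val viaRaw: String = raw"line1\nline2"
}
```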

38) What is exception handling in Scala?

Exception handling is a mechanism for handling abnormal conditions. It lets you prevent your program from terminating unexpectedly.

Scala makes "checked vs unchecked" very simple. It doesn't have checked exceptions. All exceptions are unchecked in Scala, even SQLException and IOException.

39) What is try catch in Scala?

Scala provides try and catch blocks to handle exceptions. The try block encloses code that may fail. The catch block handles an exception that occurred in the try block. You can have any number of try-catch blocks in your program according to need.

40) What is finally in Scala?

The finally block is used to release resources whether or not an exception occurred. Resources may be files, network connections, database connections and so on. The finally block is guaranteed to execute.

41) What is throw in Scala?

You can throw an exception explicitly in your code. Scala provides the throw keyword for this. It is mainly used to throw custom exceptions.

42) What is exception propagation in Scala?

In Scala, an exception can propagate along the calling chain. When an exception occurs in a function, the runtime looks for a handler there. If no handler is available, the exception is forwarded to the calling method, which is searched for a handler in turn. If a handler is present there, it catches the exception. If not, the exception moves on to the next caller in the calling chain. This whole process is known as exception propagation.

43) What is throws in Scala?

Scala has no throws keyword. Instead, it provides the @throws annotation to declare that a method may throw an exception. The annotation is placed on the method definition and tells the caller that the method may throw that exception, so the caller can enclose the call in a try-catch block to avoid abnormal termination of the program. Because all exceptions in Scala are unchecked, the compiler does not enforce the declaration; it mainly matters for interoperability with Java.

44) What is custom exception in Scala?

In Scala, you can create your own exceptions, also known as custom exceptions. You must extend the Exception class when declaring a custom exception class. You can provide your own message in the custom class.
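The exception-handling pieces discussed above (throw, try, catch, finally, the @throws annotation and a custom exception) can be sketched together. InvalidAgeException, validate, check and the finallyRuns counter are hypothetical names introduced only for this example.

```scala
object ExceptionDemo {
  // Custom exception: extend Exception and pass the message to it.
  class InvalidAgeException(message: String) extends Exception(message)

  // Counts how often the finally block has run (for demonstration only).
  var finallyRuns: Int = 0

  // @throws documents the exception; the Scala compiler does not enforce it.
  @throws(classOf[InvalidAgeException])
  def validate(age: Int): Int =
    if (age < 18) throw new InvalidAgeException(s"age $age is below 18") else age

  // catch uses pattern matching; finally runs on both success and failure.
  def check(age: Int): String =
    try {
      validate(age)
      "valid"
    } catch {
      case e: InvalidAgeException => s"invalid: ${e.getMessage}"
    } finally {
      finallyRuns += 1 // a real program would release resources here
    }
}
```

Here the exception thrown in validate propagates to its caller, check, where the matching handler catches it.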

45) What is collection in Scala?

Scala provides a rich collections library. It contains classes and traits for collecting data. These collections can be mutable or immutable, and you can use whichever suits your requirement.

46) What is Traversable in Scala collections?

Traversable is a trait used to traverse collection elements. In Scala 2.12 and earlier it is the base trait of all Scala collections and contains the methods common to all of them. In Scala 2.13 it is deprecated in favor of Iterable.

47) What is Set in Scala collections?

Set is used to store unique elements. It does not maintain any order for storing elements. You can apply various operations to sets. The default Set is immutable and is defined in the scala.collection.immutable package.

48) What is SortedSet in Scala collections?

In Scala, SortedSet extends the Set trait and keeps its elements sorted. It is useful when you want the elements of a set in sorted order. You can sort integer values as well as strings.

It is a trait, and you can apply all the methods defined in the Traversable and Set traits.

49) What is HashSet in Scala collection?

HashSet is a sealed class. It extends AbstractSet and immutable Set trait. It uses hash code to store elements.

It neither maintains insertion order nor sorts the elements.

50) What is BitSet in Scala?

Bitsets are sets of non-negative integers which are represented as variable-size arrays of bits packed into 64-bit words. The memory footprint of a bitset is determined by the largest number stored in it. It extends Set trait.

51) What is ListSet in Scala collection?

In Scala, ListSet class implements immutable sets using a list-based data structure.

Elements are stored internally in reversed insertion order, which means the newest element is at the head of the list. Iteration, however, follows insertion order.

This collection is suitable only for a small number of elements.

52) What is Seq in Scala collection?

Seq is a trait that represents sequences, that is, collections whose elements have a defined order. The variant in scala.collection.immutable is guaranteed immutable. You can access elements by their indexes, and insertion order is maintained.

Sequences support a number of methods to find occurrences of elements or subsequences.

Creating a sequence with Seq(...) returns a List by default.

53) What is Vector in Scala collection?

Vector is a general-purpose, immutable data structure. It provides random access of elements. It is good for large collection of elements.

It extends an abstract class AbstractSeq and IndexedSeq trait.

54) What is List in Scala Collection?

List is used to store ordered elements. It extends the LinearSeq trait and is a class for immutable linked lists. This class is good for last-in-first-out (LIFO), stack-like access patterns.

It maintains order and can contain duplicate elements.

55) What is Queue in Scala Collection?

Queue implements a data structure that allows inserting and retrieving elements in a first-in-first-out (FIFO) manner.

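In Scala, the immutable Queue is implemented as a pair of lists. One holds the elements that have been added, and the other holds the elements that are ready to be removed. Elements are added to the first list and removed from the second list. When the second list runs empty, the first list is reversed and takes its place.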

56) What is Stream in Scala?

Stream is a lazy list: it evaluates elements only when they are required. Lazy computation can improve the performance of your program by avoiding work whose result is never used. In Scala 2.13, Stream is deprecated in favor of LazyList.
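A minimal sketch of lazy evaluation, assuming Scala 2.13 or later, where LazyList takes the place of Stream. The sequence is infinite, yet only the requested elements are computed.

```scala
object LazyDemo {
  // Assumes Scala 2.13+; on older versions Stream.from(1) plays the same role.
  // An infinite lazy sequence of squares: 1, 4, 9, ...
  val squares: LazyList[Int] = LazyList.from(1).map(n => n * n)

  // Only the elements that are actually requested get evaluated.
  val firstThree: List[Int] = squares.take(3).toList
}
```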

57) What is Map in Scala collections?

Map stores elements as key-value pairs. In Scala, you can create a map in two ways: by passing comma-separated (key, value) pairs or by using the arrow operator (key -> value).
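Both ways of building a map produce the same result, as this small sketch with made-up keys and values shows.

```scala
object MapDemo {
  // Explicit (key, value) tuples.
  val fromPairs: Map[String, Int] = Map(("a", 1), ("b", 2))

  // The -> operator builds the same tuples.
  val fromArrows: Map[String, Int] = Map("a" -> 1, "b" -> 2)

  // Lookup by key; get returns an Option instead of throwing.
  val found: Option[Int] = fromArrows.get("b")
  val missing: Option[Int] = fromArrows.get("z")
}
```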

58) What is ListMap in Scala?

ListMap implements immutable maps using a list-based data structure. It maintains insertion order, and its operations return a ListMap. This collection is suitable only for a small number of elements.

You can create an empty ListMap either by calling its constructor or by using the ListMap.empty method.

59) What is tuple in Scala?

A tuple is an ordered collection of elements. If there is no element present, it is called an empty tuple. You can use a tuple to store data of any type, and its elements may all have the same type or different types. Tuples also let a function return multiple values.
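A short sketch of returning two values as a tuple and of mixing element types. The minMax function is a hypothetical helper.

```scala
object TupleDemo {
  // Return the minimum and maximum together as a tuple.
  def minMax(values: List[Int]): (Int, Int) = (values.min, values.max)

  // Destructure the result into two values.
  val (lo, hi) = minMax(List(3, 1, 4))

  // Elements of different types; access them by position with _1, _2, _3.
  val mixed: (Int, String, Boolean) = (1, "one", true)
}
```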

60) What is singleton object in Scala?

A singleton object is declared with the object keyword instead of class. No instance needs to be created to call the methods declared inside a singleton object.

Scala has no static concept, so a singleton object is used to provide the entry point for program execution.

61) What is companion object in Scala?

In Scala, when a class has the same name as a singleton object, the class is called the companion class and the singleton object is called its companion object.

The companion class and its companion object both must be defined in the same source file.

62) What are case classes in Scala?

Scala case classes are regular classes that are immutable by default and decomposable through pattern matching.

Instances are compared structurally through a generated equals method.

The new keyword is not required to instantiate a case class.
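These properties can be seen in a small sketch built around a hypothetical Point case class.

```scala
object CaseClassDemo {
  case class Point(x: Int, y: Int)

  val p1: Point = Point(1, 2)     // no `new` needed
  val p2: Point = p1.copy(y = 5)  // fields are immutable; copy builds a new instance

  // Structural equality: two distinct instances with equal fields are equal.
  val sameValues: Boolean = p1 == Point(1, 2)

  // Decomposition through pattern matching.
  val description: String = p2 match {
    case Point(x, y) => s"x=$x, y=$y"
  }
}
```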

63) What is file handling in Scala?

File handling is a mechanism for performing file operations such as creating, opening, reading and writing files. Scala provides the scala.io package, whose Source class offers predefined methods for reading files. For writing, Scala programs typically use Java I/O classes such as java.io.PrintWriter.

Wednesday, 27 June 2018

Monday, 25 June 2018

Data Lake - the evolution of data processing

This post examines the evolution of data processing in data lakes, with a particular focus on the concepts, architecture and technology criteria behind them.

In recent years, rapid technology advancements have led to a dramatic increase in information traffic. Our mobile networks have increased coverage and data throughput. Landlines are being slowly upgraded from copper to fiber optics.

Thanks to this, more and more people are constantly online through various devices using many different services. Numerous cheap information sensing IoT devices increasingly gather data sets - aerial information, images, sound, RFID information, weather data, etc.

All this progress results in more data being shared online. Data sets have rapidly risen in both volume and complexity, and traditional data processing applications have become inadequate for dealing with them.

This vast volume of data has introduced new challenges in data capturing, storage, analysis, search, sharing, transfer, visualization, querying, updating, and information privacy.

Inevitably, these challenges required completely new architecture design and new technologies, which help us to store, analyze, and gain insights from these large and complex data sets.

Here I will present the Data Lake architecture, which introduces an interesting twist on storing and processing data. Data Lake is neither a revolution in the big data world nor a one-size-fits-all solution, but a simple evolutionary step in data processing that came about naturally.

Concepts

"(Data Lake is) A centralized, consolidated, persistent store of raw, un-modeled and un-transformed data from multiple sources, without an explicit predefined schema, without externally defined metadata, and without guarantees about the quality, provenance and security of the data."

This definition shows one of the key concepts of Data Lake - it stores raw, unaltered data. Traditionally, we would try to filter and structure data before it comes into our data warehouse.

Two things emerge from this - structuring and transforming data on ingestion incurs a performance hit, and potential data loss. If we try to do complex computations on a large amount of incoming data, we will most likely have serious performance issues. If we try to structure data on ingestion, we might realize later on that we need pieces of data discarded during structuring.

The thing is, with vast and complex data, it is most likely that we won't know what insights we can extract from it. We don't know what value, if any, collected data will bring to our business. If we try to guess, there is a fair chance of guessing wrong.

What do we do then? We store raw data. Now, we don't want to just throw it in there, as that will lead to a data swamp - a pool of stale data, without any information on what it represents. Data should be enriched with metadata, describing its origin, ingestion time, etc. We can also partition data on ingestion, which makes processing more efficient later on. If we don't get the right partitioning on the first try, we'll still have all of it, and can re-partition it without any data loss.
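The idea of keeping the payload raw while attaching metadata and a partition key on ingestion can be sketched in Scala. Everything here is an assumption made for illustration: the RawRecord shape, the enrich and partitionKey helpers, and the source-plus-date partitioning scheme are hypothetical and not taken from any particular Data Lake product.

```scala
object IngestionSketch {
  // Hypothetical record shape: the raw payload is kept unmodified.
  final case class RawRecord(payload: String, source: String, ingestedAtEpochMs: Long)

  // Wrap an incoming payload with metadata instead of transforming it.
  def enrich(payload: String, source: String, nowEpochMs: Long): RawRecord =
    RawRecord(payload, source, nowEpochMs)

  // Hypothetical partitioning scheme: origin plus UTC ingestion date.
  def partitionKey(record: RawRecord): String = {
    val date = java.time.Instant
      .ofEpochMilli(record.ingestedAtEpochMs)
      .atZone(java.time.ZoneOffset.UTC)
      .toLocalDate
    s"source=${record.source}/date=$date"
  }
}
```

Because the payload itself is untouched, a different partitioning function can later be applied to the same records without losing any data.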

Ingesting data in this way comes with no quality guarantees at all and no access control. If we don't really know what is in there, we can't set up efficient security around it. All of this will come later, when data is processed. Everybody with access to Data Lake will potentially have unrestricted access to raw data.

We can introduce a simple security scheme by enabling selective access to data partitions. This is rather coarse-grained security, and it may or may not bring any value.

Architecture

The previous definition focused on emphasizing the core concept of Data Lake system. Let's expand it a bit:

"Data Lake supports agile data acquisition, natural storage model for complex multi-structured data, support for efficient non-relational computation, and provision for cost-effective storage of large and noisy data-sets."

This addition to our first definition gives us a clearer image of what Data Lake should be.

We should be able to gather data from different sources, using different protocols, to store both relational and non-relational data, and offer API for analyzing and processing. All of this should be flexible enough so it can scale both up and down.

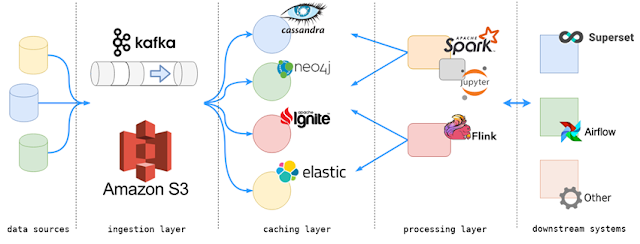

Usually, Data Lake is separated into three layers: ingestion, caching, and processing.

Any of these layers can contain multiple technologies, with each of them offering different APIs and functionality. Every application/technology in each of these layers should be able to benefit from both horizontal and vertical scaling, and to be fault-tolerant to a degree. Each of these systems should be orchestrated using resource manager, to offer elasticity in cost, capacity and fault tolerance.

The purpose of the ingestion layer is to act as a storage layer for raw data that is incoming to Data Lake system. This layer must not force the user to apply schema to incoming data, but can offer it as an option. We might want to enrich data with metadata, so we might want to structure it a bit, after all.

Incoming data usually consists of time series, messages, or events. This data is usually gathered from sensors of IoT devices, weather stations, industrial systems, medical equipment, warehouses, social networks, media, user portals, etc.

Support for data partitioning, even though not mandatory, is highly encouraged, as we may partition ingestion data to try and improve performance when processing it.

The purpose of the caching layer is to temporarily or permanently store processed (or pre-processed) data, relational or non-relational. Data which is stored here is either ready for visualization/consumption by external systems, or is prepared for further processing.

Applications residing in the processing layer will take data from the ingestion layer, process it, structure it in some way, and store it here, optionally partitioning it in a certain manner.

It is usual to have a data set, used by downstream systems, scattered around different storage systems found inside the caching layer (relational, NoSQL, indexing engines, etc.).

The purpose of the processing layer is to offer one or more platforms for distributed processing and analysis of large data sets. It can access data stored in both the ingestion and caching layer.

Usually, technologies found here are implemented using master-worker architecture - master node analyzes data processing algorithms coded in applications which are submitted to it, creates strategy of execution, and then distributes workload over multiple worker instances, so they can execute it in parallel. With this type of architecture, the processing layer can easily be scaled to fit the needs of computational power required for specific use-case.
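The master-worker idea can be imitated on a single machine with Scala Futures. This is only a local analogy under stated assumptions: parallelSum is a hypothetical function, the "workers" are threads from the global execution context rather than separate nodes, and a real processing platform would also handle scheduling, data locality and failures.

```scala
object MasterWorkerSketch {
  import scala.concurrent.{Await, Future}
  import scala.concurrent.ExecutionContext.Implicits.global
  import scala.concurrent.duration._

  // "Master": split the data into chunks, hand each chunk to a worker,
  // then combine the partial results. `workers` must be positive.
  def parallelSum(data: Vector[Int], workers: Int): Long = {
    val chunkSize = math.max(1, math.ceil(data.size.toDouble / workers).toInt)
    val partials: List[Future[Long]] =
      data.grouped(chunkSize).toList.map { chunk =>
        Future(chunk.map(_.toLong).sum) // "worker": processes one chunk
      }
    Await.result(Future.sequence(partials), 10.seconds).sum
  }
}
```

Raising the workers argument corresponds loosely to adding worker instances: the same job is cut into more, smaller pieces that can run in parallel.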

Technologies Criteria

Looking back at previously stated Data Lake properties, let's define the criteria that the technology stack used to implement it needs to meet.

- Scalability. Technologies need to be able to accept a vast and increasing amount of data in a performant way.

- Durability. Data needs to be safely persisted, and prevention of data loss needs to be supported (by using replication, backups, or some other mechanism).

- Support for ingesting unstructured (schema-less) data.

- Support for efficient handling of stream-like data (time series, events/messages).

- Support for data erasure, to be able to remove unneeded data, or data that is not allowed to be present, due to privacy/legal concerns.

- Support for data updates.

- Support for different access patterns - random access, search/query languages, bulk reads. Different downstream systems might have different requirements in accessing data, depending on their use-cases.

Conclusion

In this part, we explored the core idea behind Data Lake, its architecture, and functional requirements that it needs to satisfy.

In the next part of this series, we will examine what technologies can be used to implement a fully functional Data Lake system.

Source:house of boats

Thursday, 21 June 2018

Hive HBase Integration

Hive

The Apache Hive ™ data warehouse software facilitates querying and managing large datasets residing in distributed storage. Hive provides a mechanism to project structure onto this data and query the data using a SQL-like language called HiveQL. At the same time this language also allows traditional map/reduce programmers to plug in their custom mappers and reducers when it is inconvenient or inefficient to express this logic in HiveQL.

HBase

Use Apache HBase when you need random, realtime read/write access to your Big Data. Apache HBase is an open-source, distributed, versioned, non-relational database modeled after Google's Bigtable: A Distributed Storage System for Structured Data by Chang et al. Just as Bigtable leverages the distributed data storage provided by the Google File System, Apache HBase provides Bigtable-like capabilities on top of Hadoop and HDFS.

Steps for Hive and HBase integration

Step 1: In the HBase shell, create a table named demotable with a column family named emp

create 'demotable','emp'

-------------------------------------------------------------------------------------------------------------

Step 2: Add the following jars in the Hive shell

add jar /home/username/hbase-0.94.15/hbase-0.94.15.jar;

add jar /home/username/hbase-0.94.15/lib/protobuf-java-2.4.0a.jar;

add jar /home/username/hbase-0.94.15/lib/zookeeper-3.4.5.jar;

add jar /home/username/hbase-0.94.15/hbase-0.94.15-tests.jar;

add jar /home/username/hbase-0.94.15/lib/guava-11.0.2.jar;

list jars;

-------------------------------------------------------------------------------------------------------------

Step 3: Set the ZooKeeper quorum that Hive should use to reach HBase

set hbase.zookeeper.quorum=localhost;

-------------------------------------------------------------------------------------------------------------

Step 4: Create an external table named hivedemotable in Hive, mapped to the HBase table. The empno column maps to the HBase row key (:key), and the remaining columns map to the emp column family.

create external TABLE hivedemotable(empno int, empname string, empsal int, gender string)
STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,emp:empname,emp:empsal,emp:gender")
TBLPROPERTIES ("hbase.table.name" = "demotable");

-------------------------------------------------------------------------------------------------------------

Step 5: Overwrite hivedemotable with the rows of an existing Hive table named employee. You have to create the employee table in Hive beforehand, with the same columns and some data in it.

insert overwrite table hivedemotable select empno, empname ,empsal, gender from employee;

-------------------------------------------------------------------------------------------------------------

Step 6: Try this in the Hive shell

select * from hivedemotable;

-------------------------------------------------------------------------------------------------------------

Step 7: Try this in the HBase shell

scan 'demotable'

-------------------------------------------------------------------------------------------------------------

Now you can see the values of hivedemotable in the demotable table in HBase.
